Introduction to RAG and MCP

In AI development, two common approaches for connecting large language models (LLMs) to information beyond their training data are Retrieval-Augmented Generation (RAG) and the Model Context Protocol (MCP). This article compares them to help you decide which fits your use case, or whether you need both.

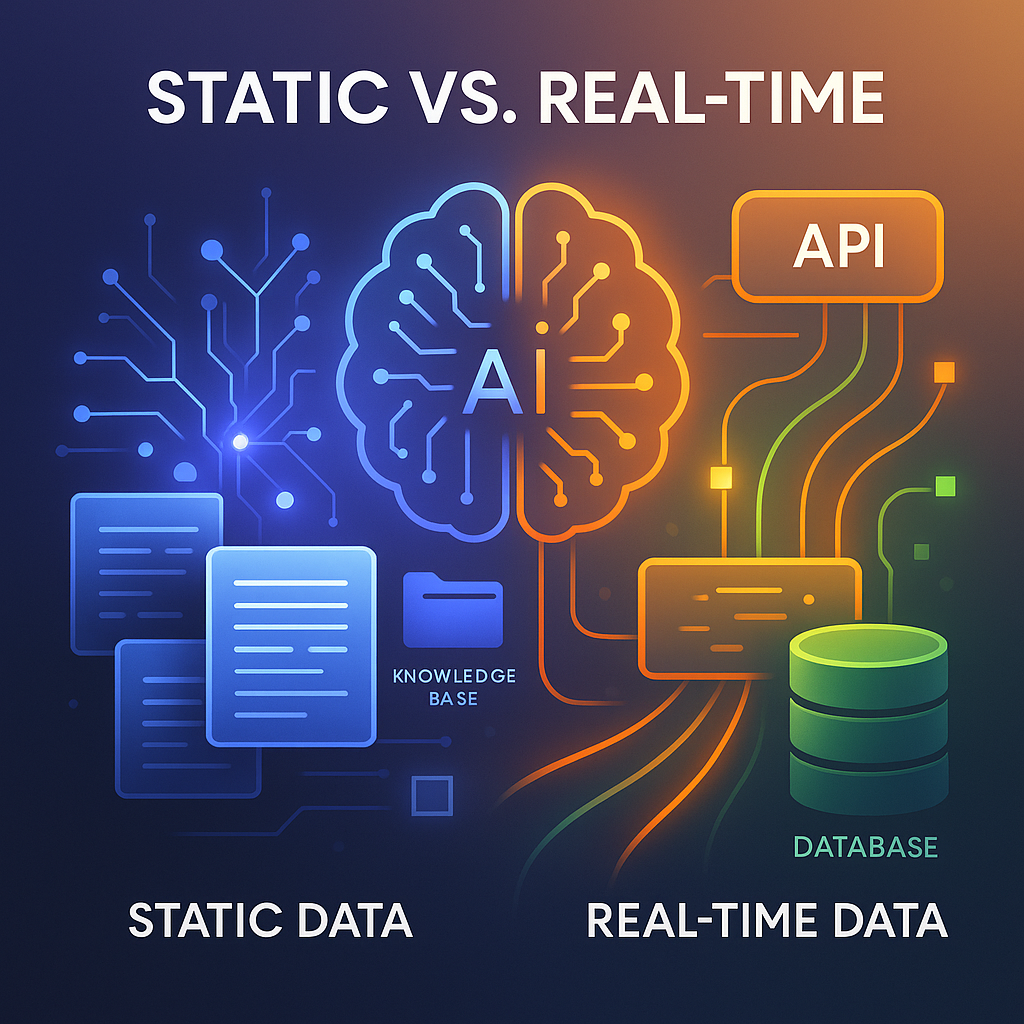

RAG and MCP are two distinct but complementary ways of incorporating LLMs into AI workflows. RAG grounds an LLM's responses in a document repository, so that answers draw on existing knowledge and can be traced back to their sources. The process starts by indexing a knowledge base, such as manuals or other documents, and encoding it in a searchable format. When a query arrives, the system searches the index, retrieves the most relevant passages, and adds them to the LLM's input. The model can then answer using both its trained knowledge and the retrieved data.

MCP, by contrast, is an open protocol that lets an LLM-powered application interact with external systems, databases, and tools at run time. MCP servers expose tools, such as API calls or database queries, and the model decides when to use them while generating a response. When it does, it supplies the required arguments, the tool retrieves live data or performs an action, and the result is returned to the model, which incorporates it into its answer.

RAG is well suited to relatively static knowledge, such as answering policy questions or referencing manuals, while MCP is more effective when a task needs live data or actions, such as checking current inventory or executing a workflow. Many systems use both: RAG for knowledge retrieval and MCP for real-time interaction.

Understanding Retrieval-Augmented Generation (RAG)

RAG is a technique that improves AI responses by combining information retrieval with generative models. Its core idea is to retrieve relevant passages from existing documents or databases and supply them as context before the model generates a response. This is particularly helpful when a response must include specific, verifiable information.

RAG works by indexing a collection of knowledge, such as articles or manuals, and using retrieval to identify the content most relevant to a query. That content is then provided to the LLM as context, so its response is grounded in the retrieved material rather than in the model's memory alone.
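
To make the augmentation step concrete, here is a minimal Python sketch of how retrieved passages might be placed into a prompt. The `Chunk` class, the `build_augmented_prompt` function, the prompt wording, and the sample policy text are all hypothetical; the point is only that each passage is labeled with its source so the answer can be traced back to it.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # where the passage came from, e.g. a file name or URL
    text: str    # the retrieved passage itself


def build_augmented_prompt(question: str, chunks: list[Chunk]) -> str:
    """Combine retrieved chunks and the user's question into one prompt.

    Each chunk is numbered and tagged with its source so the model can cite
    it and a reader can check the answer against the original document.
    """
    context_lines = [
        f"[{i}] ({chunk.source}) {chunk.text}"
        for i, chunk in enumerate(chunks, start=1)
    ]
    return (
        "Answer the question using only the numbered context below. "
        "Cite the numbers of the passages you use.\n\n"
        "Context:\n" + "\n".join(context_lines) + "\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Invented sample passages standing in for retriever output.
    retrieved = [
        Chunk("returns-policy.md", "Items can be returned within 30 days of delivery."),
        Chunk("returns-policy.md", "Refunds are issued to the original payment method."),
    ]
    print(build_augmented_prompt("How long do I have to return an item?", retrieved))
```

Numbering the passages is one simple way to get the traceability RAG is valued for: the model can be asked to cite passage numbers, and each number maps back to a source document.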

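Embeddings are central to most RAG systems: they represent documents and queries as vectors, so that texts with similar meaning end up close together. This enables retrieval that goes beyond exact keyword matching. Relevant content is typically found with vector (nearest-neighbor) search over these embeddings; lexical methods such as TF-IDF or BM25, which match on shared terms rather than meaning, are also used, sometimes alongside vector search.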
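
The sketch below shows vector-space retrieval ranked by cosine similarity. To stay self-contained it uses TF-IDF vectors, a lexical method, as a stand-in for a learned embedding model; in a dense-retrieval system `_vectorize` would instead call an embedding model, while the ranking step would stay the same. `TfidfIndex` and its methods are illustrative, not a library API, and a real system would replace the linear scan with an approximate nearest-neighbor index.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


class TfidfIndex:
    """Toy vector index: TF-IDF vectors ranked by cosine similarity."""

    def __init__(self, docs: list[str]):
        self.docs = docs
        tokenized = [tokenize(d) for d in docs]
        n = len(docs)
        # Document frequency: in how many documents each term appears.
        df = Counter(term for toks in tokenized for term in set(toks))
        # Smoothed IDF keeps every weight positive, even for common terms.
        self.idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
        self.vectors = [self._vectorize(toks) for toks in tokenized]

    def _vectorize(self, tokens: list[str]) -> dict[str, float]:
        """Build a unit-length sparse vector; terms not in the index are ignored."""
        tf = Counter(tokens)
        vec = {t: c * self.idf[t] for t, c in tf.items() if t in self.idf}
        norm = math.sqrt(sum(w * w for w in vec.values()))
        return {t: w / norm for t, w in vec.items()} if norm else {}

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        """Return up to k (document, score) pairs that have a positive score."""
        q = self._vectorize(tokenize(query))
        # Both vectors are unit length, so the dot product is the cosine similarity.
        scored = [
            (sum(w * doc.get(t, 0.0) for t, w in q.items()), i)
            for i, doc in enumerate(self.vectors)
        ]
        scored.sort(reverse=True)
        return [(self.docs[i], s) for s, i in scored[:k] if s > 0]


if __name__ == "__main__":
    index = TfidfIndex([
        "Reset your router by holding the button for ten seconds.",
        "Our refund policy covers purchases made in the last 30 days.",
        "Router lights blinking red indicate a connection problem.",
    ])
    for doc, score in index.search("How do I reset the router?", k=2):
        print(f"{score:.3f}  {doc}")
```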

RAG works well in situations that call for static, well-documented knowledge. For example, customer support systems can use RAG to retrieve policy documents or troubleshooting guides, helping keep responses aligned with official documentation. Likewise, academic research tools can use RAG to fetch papers relevant to a user's query.

Although RAG is excellent for static knowledge, it depends heavily on the quality and freshness of the indexed content. If the knowledge base is outdated or incomplete, the system may return inaccurate answers, so the index must be updated regularly to keep a RAG system effective.
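
One way to keep an index fresh without rebuilding it from scratch is to compare content hashes. The sketch below assumes you store a SHA-256 hash for each indexed document (a hypothetical convention, not a feature of any particular vector store) and computes which documents need re-embedding and which should be removed. The actual upsert and delete calls depend on your vector store and are not shown.

```python
import hashlib


def content_hash(text: str) -> str:
    """Fingerprint a document's text so changes can be detected cheaply."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(
    current_docs: dict[str, str], indexed_hashes: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Compare the source documents with what the index currently holds.

    current_docs maps document ID -> current text from the source of truth.
    indexed_hashes maps document ID -> hash stored when it was last indexed.
    Returns (to_upsert, to_remove): IDs that are new or changed, and IDs that
    are still in the index but no longer exist in the source.
    """
    to_upsert = [
        doc_id
        for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]
    to_remove = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return sorted(to_upsert), sorted(to_remove)


if __name__ == "__main__":
    indexed = {"faq": content_hash("old answer"), "manual": content_hash("v1")}
    source = {"faq": "updated answer", "pricing": "new page"}
    print(plan_reindex(source, indexed))  # (['faq', 'pricing'], ['manual'])
```

Running a check like this on a schedule (or on every change to the source) limits re-embedding to documents that actually changed.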

Exploring Model Context Protocol (MCP)

MCP is an open protocol that standardizes how AI applications connect to external tools, APIs, and databases. Whereas RAG typically draws on a pre-built index of relatively static content, MCP gives the model access to live data and lets it trigger actions in external systems, which makes it considerably more interactive than RAG.

In MCP, the model communicates with external tools through structured requests. Each MCP server advertises the tools it offers, and every tool has a name, a description, and an input schema (optionally also an output schema) that tells the model how to call it. When a task requires live data, such as current stock levels from a database or weather information from an API, the model selects the appropriate tool and supplies the required arguments. The MCP client sends the call to the server, which runs the tool and returns the result to the model for further processing.
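
For reference, here is roughly what a tool definition and a tool invocation look like on the wire. MCP messages use JSON-RPC 2.0, and a client invokes a tool with a `tools/call` request that names the tool and passes its `arguments`. The `get_stock_level` tool and its schema are hypothetical, and in practice an official MCP SDK builds these messages and handles the transport for you; this hand-built version only illustrates their shape.

```python
import json

# Tool metadata as an MCP server might advertise it in a tools/list result.
# The tool itself (get_stock_level) is hypothetical.
STOCK_TOOL = {
    "name": "get_stock_level",
    "description": "Return the current number of units in stock for a product SKU.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "sku": {"type": "string", "description": "Product SKU, e.g. 'SKU-123'"},
        },
        "required": ["sku"],
    },
}


def make_tool_call_request(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 tools/call request as an MCP client would send it."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


if __name__ == "__main__":
    print(json.dumps(STOCK_TOOL, indent=2))
    print(make_tool_call_request(1, "get_stock_level", {"sku": "SKU-123"}))
```

The `description` fields matter as much as the types: they are what the model reads when deciding whether and how to call the tool.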

MCP is particularly valuable for tasks that need real-time information, such as in e-commerce, financial services, or customer support. For example, if a customer asks whether a product is in stock, the model can call an MCP tool that queries the live inventory database. Similarly, if an AI assistant needs to update a user profile, it can call a tool that performs the update through the relevant API.
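
On the server side, handling such a call means looking up the tool, running it with the supplied arguments, and wrapping the output in a result. The sketch below assumes a hypothetical in-memory `INVENTORY` dict in place of a live database. Following the MCP convention, a failure while running the tool is reported as a result with `isError` set so the model can see it, while an unknown tool name is treated as a protocol-level error (here a raised `ValueError` that a JSON-RPC layer, not shown, would convert into an error response).

```python
# Hypothetical in-memory inventory standing in for a live database.
INVENTORY = {"SKU-123": 42, "SKU-456": 0}


def get_stock_level(sku: str) -> str:
    """Tool implementation: look up the current stock for one SKU."""
    if sku not in INVENTORY:
        raise ValueError(f"unknown SKU {sku}")
    return f"{sku}: {INVENTORY[sku]} units in stock"


# Registry mapping tool names (as advertised to clients) to implementations.
HANDLERS = {"get_stock_level": get_stock_level}


def handle_tools_call(params: dict) -> dict:
    """Execute the params of a tools/call request and build the result object."""
    name = params.get("name")
    handler = HANDLERS.get(name)
    if handler is None:
        # Unknown tool: a protocol-level error. A JSON-RPC layer (not shown)
        # would turn this exception into an error response.
        raise ValueError(f"Unknown tool: {name}")
    try:
        text = handler(**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": text}], "isError": False}
    except Exception as exc:
        # Any exception raised while running the tool is returned as a
        # result with isError set, so the model can see what went wrong.
        return {"content": [{"type": "text", "text": f"Tool error: {exc}"}], "isError": True}


if __name__ == "__main__":
    print(handle_tools_call({"name": "get_stock_level", "arguments": {"sku": "SKU-123"}}))
```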

Because the model can call tools repeatedly and use each result to decide its next step, MCP also supports multi-step workflows in which tool calls are planned and sequenced based on incoming data. This flexibility sets MCP apart from RAG, which is best at retrieving static information, and makes it a good fit for applications that involve decision-making and real-time actions.
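
The multi-step pattern can be illustrated with a small loop. In the sketch below, `scripted_model` is a hypothetical stand-in for an LLM that decides the next step from the conversation so far, and the two tools are likewise invented. The key point is that the second tool call uses the output of the first, while `max_steps` bounds the loop in case the model never produces a final answer.

```python
from typing import Callable


# Hypothetical tools; real ones would call APIs or databases via MCP.
def find_order(customer_id: str) -> str:
    return {"C-1": "O-100"}.get(customer_id, "none")


def get_shipping_status(order_id: str) -> str:
    return {"O-100": "in transit"}.get(order_id, "unknown")


TOOLS: dict[str, Callable[..., str]] = {
    "find_order": find_order,
    "get_shipping_status": get_shipping_status,
}


def scripted_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: picks the next step from the tool results seen so far."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if not results:
        return {"tool": "find_order", "arguments": {"customer_id": "C-1"}}
    if len(results) == 1:
        # The second call depends on the first call's output.
        return {"tool": "get_shipping_status", "arguments": {"order_id": results[0]}}
    return {"answer": f"Your order {results[0]} is {results[1]}."}


def run_agent(question: str, model=scripted_model, max_steps: int = 5) -> str:
    """Alternate between model decisions and tool executions until an answer appears."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = model(history)
        if "answer" in step:
            return step["answer"]
        output = TOOLS[step["tool"]](**step["arguments"])
        history.append({"role": "tool", "content": output})
    raise RuntimeError("no final answer within max_steps")


if __name__ == "__main__":
    print(run_agent("Where is my order?"))
```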

Choosing Between RAG and MCP

When choosing between RAG and MCP, consider the nature of the task and the type of data involved. RAG is better suited to retrieving information from an indexed knowledge base, which makes it a good fit for information that changes infrequently, such as company policies, product specifications, or academic papers. Its strength is finding relevant content and grounding responses in documented facts.

MCP, on the other hand, is better suited to tasks that require live data, dynamic actions, or interaction with external systems. For instance, retrieving current stock levels, processing user requests, or calling APIs for live data are tasks that MCP handles well. By integrating external tools and APIs, MCP enables more interactive, action-driven responses than RAG.

In many cases, combining RAG and MCP is the best approach: RAG retrieves static knowledge while MCP handles real-time actions. In customer support, for example, RAG might retrieve a product's replacement policy, MCP could trigger an order replacement, and the model would then explain the outcome with reference to the retrieved policy.

The decision ultimately comes down to whether the task needs static knowledge retrieval, dynamic real-time interaction, or both. When it needs both, using RAG for knowledge retrieval and MCP for actions covers each side.

Pitfalls and Challenges of RAG and MCP

Both RAG and MCP present challenges to consider when designing AI systems. For RAG, the main risk is an outdated or incomplete knowledge base: if the indexed documents are stale, retrieval may surface inaccurate information or nothing useful at all, which degrades response quality. Retrieval can also miss content that is present. RAG systems split documents into smaller chunks for indexing, and if the relevant information is split across chunks or phrased differently from the query, the retriever may not find it. Regular updates and better chunking strategies help mitigate these problems.
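
A common chunking strategy is a sliding window with overlap, so text near a chunk boundary also appears in the neighboring chunk. This minimal sketch counts whitespace-separated words for simplicity; real pipelines often count model tokens or split on sentence and section boundaries instead. The default sizes are arbitrary placeholders, not recommendations.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of chunk_size words.

    Consecutive windows share `overlap` words, so content that falls near a
    boundary is present in both neighboring chunks.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(sample, chunk_size=4, overlap=2):
        print(chunk)
```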

Another issue with RAG is prompt overload. Retrieving too many chunks, especially irrelevant ones, fills the model's context window with noise and can lower the accuracy of its responses. Limiting the number of retrieved chunks and keeping only the most relevant ones helps mitigate this.
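
Limiting retrieved context can be as simple as combining a top-k cutoff, a minimum relevance score, and a length budget. In the sketch below, `select_context` and its thresholds are illustrative; the values would need tuning for your own retriever and its scoring scale, and word count is used as a rough proxy for tokens.

```python
def select_context(scored_chunks, k=4, min_score=0.2, max_words=300):
    """Pick which retrieved chunks go into the prompt.

    scored_chunks: iterable of (score, text) pairs from the retriever.
    Keeps at most k chunks, stops at the first chunk scoring below
    min_score, and skips any chunk that would push the total length
    past max_words.
    """
    selected, used = [], 0
    for score, text in sorted(scored_chunks, key=lambda pair: pair[0], reverse=True):
        if len(selected) == k or score < min_score:
            break  # enough chunks, or everything left is too weak
        length = len(text.split())
        if used + length > max_words:
            continue  # too long for the remaining budget; a shorter chunk may still fit
        selected.append(text)
        used += length
    return selected


if __name__ == "__main__":
    candidates = [(0.91, "Returns are accepted within 30 days."), (0.15, "Unrelated text."),
                  (0.74, "Refunds go to the original payment method.")]
    print(select_context(candidates, k=3))
```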

MCP has its own challenges, especially when tools are poorly defined. If a tool lacks a clear description or input/output specification, the model may call it incorrectly, and the action fails. To avoid this, give every tool a precise description and well-defined input and output schemas.
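
Validating the model's arguments against the tool's input schema before executing anything catches many malformed calls early. The hypothetical `validate_arguments` below checks only required fields, basic types, and `additionalProperties: false`; a complete JSON Schema validator (for example, the Python `jsonschema` package) covers the rest of the specification.

```python
# JSON Schema type names mapped to the Python types that satisfy them.
_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate_arguments(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments look valid.

    Covers only 'required', per-property 'type', and
    'additionalProperties: false'.
    """
    errors = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            errors.append(f"missing required argument '{name}'")
    for name, value in arguments.items():
        if name not in props:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected argument '{name}'")
            continue
        expected = props[name].get("type")
        if expected not in _TYPES:
            continue  # no type given, or a type this sketch does not check
        ok = isinstance(value, _TYPES[expected])
        # bool is a subclass of int in Python, but not a JSON Schema number.
        if expected in ("integer", "number") and isinstance(value, bool):
            ok = False
        if not ok:
            errors.append(f"argument '{name}' should be of type {expected}")
    return errors
```

Returning these messages to the model, rather than failing silently, gives it a chance to correct the call.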

MCP also introduces security risks, especially when the model can perform actions with real effects. Proper authentication and authorization, with each tool limited to the permissions it actually needs, help prevent misuse and keep the system secure.
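
A least-privilege check can be enforced at the point where tool calls are dispatched. In this sketch, the tool-to-scope mapping, the scope names, and the `Caller` type are all hypothetical; in a real deployment the caller's scopes would come from your authentication layer (for example, the scopes on an OAuth access token). Unknown tools are denied by default.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from each tool to the single scope it requires.
REQUIRED_SCOPE = {
    "get_stock_level": "inventory:read",
    "update_user_profile": "profiles:write",
}


@dataclass
class Caller:
    user_id: str
    scopes: set[str] = field(default_factory=set)


def authorize_tool_call(caller: Caller, tool_name: str) -> None:
    """Raise PermissionError unless the caller holds the scope the tool needs."""
    scope = REQUIRED_SCOPE.get(tool_name)
    if scope is None:
        # Deny by default: a tool with no declared scope is never callable.
        raise PermissionError(f"tool '{tool_name}' is not registered for use")
    if scope not in caller.scopes:
        raise PermissionError(f"{caller.user_id} lacks scope '{scope}' for '{tool_name}'")


if __name__ == "__main__":
    reader = Caller("alice", {"inventory:read"})
    authorize_tool_call(reader, "get_stock_level")  # allowed
    try:
        authorize_tool_call(reader, "update_user_profile")
    except PermissionError as err:
        print("denied:", err)
```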

Hybrid Workflows: Combining RAG and MCP

Combining RAG and MCP offers an effective solution for AI systems that need both contextual grounding and real-time actions. Many real-world applications require both retrieving static information and interacting with dynamic data or performing actions. By combining both methods, developers can create workflows that leverage the strengths of each approach.

In a hybrid workflow, RAG retrieves relevant information from a knowledge base to give the model context for the query. MCP is then used to interact with APIs, databases, or other external tools, supplying live data so the system can respond to current conditions. Once the action is complete, the model can explain the result, grounding its explanation in the retrieved knowledge.

For instance, in customer support, RAG could fetch the return policy while MCP checks the status of a customer's return via an API. With both in hand, the model can explain what the policy means given the current return status.
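
The return-policy example can be sketched end to end in Python. Everything here is hypothetical: the policy texts are invented, `retrieve` uses naive word overlap instead of embeddings, and `get_return_status` stands in for an MCP `tools/call` to a return-tracking server. The function only builds the final prompt; sending it to an LLM is left out.

```python
import re

# Invented sample data: a tiny policy knowledge base (RAG side) and a
# return-tracking system (MCP side).
POLICY_DOCS = {
    "returns-policy": "Refunds are issued within 5 business days after a return is received.",
    "shipping-policy": "Standard shipping takes 3 to 7 business days.",
}
RETURNS = {"R-77": {"status": "received"}}


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[tuple[str, str]]:
    """RAG step: rank policy documents by the number of words shared with the query."""
    q = tokens(query)
    ranked = sorted(POLICY_DOCS.items(), key=lambda item: len(q & tokens(item[1])), reverse=True)
    return ranked[:k]


def get_return_status(return_id: str) -> dict:
    """MCP step: stands in for a tools/call to a return-tracking server."""
    return RETURNS.get(return_id, {"status": "not found"})


def build_hybrid_prompt(question: str, return_id: str) -> str:
    """Retrieve the policy, fetch the live status, and combine both for the model."""
    policy = retrieve(question)
    status = get_return_status(return_id)
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in policy)
    return (
        f"Policy context:\n{context}\n\n"
        f"Live return status for {return_id}: {status['status']}\n\n"
        f"Question: {question}\n"
        "Explain what the policy means for this return, citing the policy ID."
    )


if __name__ == "__main__":
    print(build_hybrid_prompt("When will I get my refund after my return is received?", "R-77"))
```

Keeping the retrieved policy and the live status as separate, labeled sections of the prompt makes it clear to both the model and a reviewer which facts are documented and which are current.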

Hybrid workflows are especially valuable for applications that require both accurate, traceable responses and real-time actions. They allow AI systems to automate processes, manage data, and interact with external systems, making them more efficient and capable.

Conclusions

RAG and MCP each offer unique benefits for different task types. RAG is ideal for retrieving traceable information from static sources, while MCP excels at handling live data and real-time actions. Combining both approaches in a hybrid workflow can provide the most effective solution.

For AI systems that handle both static and dynamic data, cloud servers can provide the necessary flexibility. Cloud infrastructure can scale as data needs change, so that RAG and MCP workloads have the resources they require. Whether hosting a knowledge base for RAG or providing compute for real-time MCP actions, cloud services can help both run smoothly, securely, and with minimal downtime.

How to Leverage Cloud Servers:

Step 1: Deploy a cloud server in a region close to your users (e.g., Frankfurt for EU users or São Paulo for Brazilian audiences) to keep latency low for both RAG and MCP tasks, choosing the region that best fits your data requirements.

Step 2: Choose an operating system such as Ubuntu or AlmaLinux and select the add-ons you need, such as MySQL for database management or a VPN server for secure connections.

Step 3: Set up a knowledge base for RAG, or deploy the APIs and MCP servers your workflows need. Because the infrastructure is scalable, you can add or remove resources as your project's requirements change.

Benefit of Cloud Servers: Cloud servers offer flexible, scalable, and secure infrastructure that supports both static and dynamic AI workflows, helping deliver reliable performance and cost-effective resource management.


Frequently Asked Questions

What is Retrieval-Augmented Generation (RAG) and how does it work?

RAG enhances LLMs by retrieving relevant documents from external sources before generating a response. It involves indexing a knowledge base, searching it for pertinent information, and adding that information to the model's prompt to ground its output in factual content. This makes it well suited to domain-specific questions and to information newer than the model's training data, provided the index is kept up to date.

What is Model Context Protocol (MCP) and how does it differ from RAG?

MCP is an open standard that enables LLMs to interact with external systems, APIs, and tools in real time. Unlike RAG, which retrieves documents from a pre-built index, MCP supports dynamic interactions such as querying databases or executing functions, giving LLMs access to live data and the ability to perform actions beyond text generation.

When should I use RAG over MCP?

Use RAG when your application requires grounding responses in static, authoritative documents like manuals, policies, or research papers. It’s suitable for scenarios where the information doesn’t change frequently and needs to be referenced accurately.

When is MCP the better choice over RAG?

Opt for MCP when your application needs to perform real-time actions, such as querying live databases, interacting with APIs, or executing workflows. It’s ideal for dynamic tasks that require up-to-date information and system interactions.

Can RAG and MCP be used together in a single system?

Yes, combining RAG and MCP allows for a hybrid approach where RAG retrieves static knowledge to ground responses, and MCP handles real-time interactions and actions. This integration provides both accurate information and dynamic capabilities in AI systems.

What are the security considerations when implementing MCP?

MCP implementations should use short-lived (ephemeral) credentials rather than static secrets, and should enforce least-privilege access so that each tool can do only what it needs to. Fragmented identity management, where each tool or server handles credentials separately, makes these controls harder to enforce and can create vulnerabilities, so a unified approach to identity management is recommended.

How can I structure my data for optimal RAG performance?

For effective RAG, split documents into manageable chunks, embed each chunk with a suitable embedding model, and store the resulting vectors in a vector database. Update the knowledge base regularly to keep the retrieved information relevant and accurate.

What are common pitfalls in RAG implementations?

Common issues in RAG include outdated or incomplete knowledge bases, improper chunking strategies leading to irrelevant information retrieval, and prompt overload from excessive context. Regular updates, careful chunking, and limiting retrieved content can help mitigate these problems.

What are the scalability considerations for MCP deployments?

MCP deployments can be scaled by containerizing services, using orchestration tools, and keeping the architecture modular, so that individual components can be scaled independently to handle varying loads while maintaining performance.

How do RAG and MCP impact the reliability of AI systems?

RAG improves reliability by grounding responses in retrieved, factual information, which reduces (though does not eliminate) hallucinations. MCP improves reliability by giving the model access to live data and the ability to take actions, so the system can answer dynamic queries accurately and carry out the tasks they require.
